I constantly see a desire to deliver enhancements and additional business capabilities to end users at a faster rate. What I don't see is a methodical and data-driven approach to achieving a faster delivery rate. I typically use a tactic called value stream mapping to improve clients' speed to market. That tactic seems obvious to me but isn't used as widely as I think it should be.

I'm going to define and illustrate value stream mapping for you in hopes that you see the value and understand the tactic well enough to apply it to your existing production processes and procedures. The concept applies to application features, infrastructure features, DevOps automation capabilities, and just about any type of information technology process I can think of. In fact, it applies to any business process I can think of, including those that aren't IT-related.

Example Application Delivery Value Stream

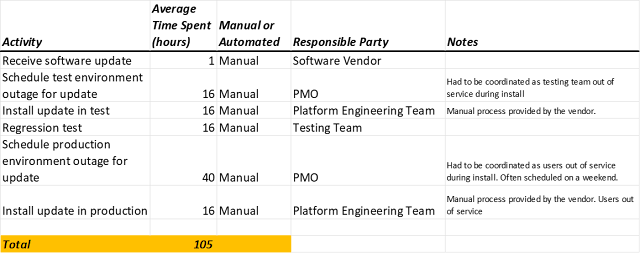

This is an example of the delivery process for a highly customized commercial off-the-shelf (COTS) application. The vendor delivered customizations frequently, on average one to two updates per week, and each update had to be tested and deployed. Both steps required significant manual labor. The time and effort involved were costly and needed tuning, so we elected to do a value stream analysis.

Below are the components of the value stream, along with the manual time spent testing and deploying each update. Note that deployments required a lengthy outage, so significant coordination with the testing team and business users was necessary. That coordination often extended the lag between receiving an update from the vendor and getting it into the hands of end users.

We decided to automate the deployment process. The procedure given to us by the vendor was entirely manual. While the deployment process accounted for only 32 clock-time hours of the total, decreasing that time to 4 hours allowed much greater flexibility in scheduling updates in the test environment as well as production. Test environment updates could now be done off-hours without putting the testing team out of service. Additionally, production updates could be deployed off-hours on a weeknight and no longer required a weekend.

Lessons Learned

Automating testing would be the logical next tuning step. That said, automating tests for a COTS application is easier said than done. This particular application did not lend itself to easy UI testing in an automated fashion. As we didn't have access to product source code, testing service APIs wasn't an option either.

The value stream tuning effort illustrated here works for custom applications just as well as it did for this COTS example. The value stream tactic applies the tuning principle of optimizing the largest targets (those that take the most time) first. This is the same principle we use when we tune CPU or memory consumption in applications. In fact, the principle can be used for non-IT processes as well, like budgets.
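To make the "largest targets first" principle concrete, here is a minimal Python sketch that ranks the steps of a value stream by clock time and shows each step's share of the total. The `Step` class, the `rank_steps` function, the step names, and every duration except the 32-hour deployment figure are my own illustrative assumptions, not measurements from the engagement described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One step in a value stream (hypothetical structure for illustration)."""
    name: str
    clock_hours: float


def rank_steps(steps):
    """Return (step, share_of_total) pairs, longest step first."""
    total = sum(s.clock_hours for s in steps)
    ordered = sorted(steps, key=lambda s: s.clock_hours, reverse=True)
    # Guard against an all-zero value stream to avoid dividing by zero.
    return [(s, s.clock_hours / total if total else 0.0) for s in ordered]


# Illustrative numbers only. The 32 hours for deployment comes from the
# article; every other step name and duration is a made-up placeholder.
steps = [
    Step("vendor update intake", 2),
    Step("manual testing", 40),
    Step("deployment", 32),
    Step("business sign-off", 8),
]

for step, share in rank_steps(steps):
    print(f"{step.name:<22} {step.clock_hours:>5.1f} h  {share:6.1%}")
```

The step at the top of the ranking is the natural first candidate, but as the deployment example shows, the cost and feasibility of improving a step matter as much as its size. Rerunning the ranking after each change shows where the next target is.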

Value stream analysis should be an ongoing effort repeated periodically. Over time, your deployment process changes. When that happens, the value stream will also change.

As with other types of tuning efforts, it's important to identify a specific target, because it's always possible to keep improving. Having a target lets you know when the tuning effort is "done" and effort can be directed elsewhere.

Value stream analysis often reveals business procedures and processes that are not optimal. In another example from the field, I've seen organizational procedures force DNS entries and firewall rule changes to be made manually, with significant lead time. It's important to track these activities in the value stream as well. You need accurate information to make effective tuning decisions.

Thanks for taking the time to read my article. I'm always interested in comments and suggestions.
